Navigating the Legal Landscape of AI in AEC: What Design Tech Leaders Need to Know

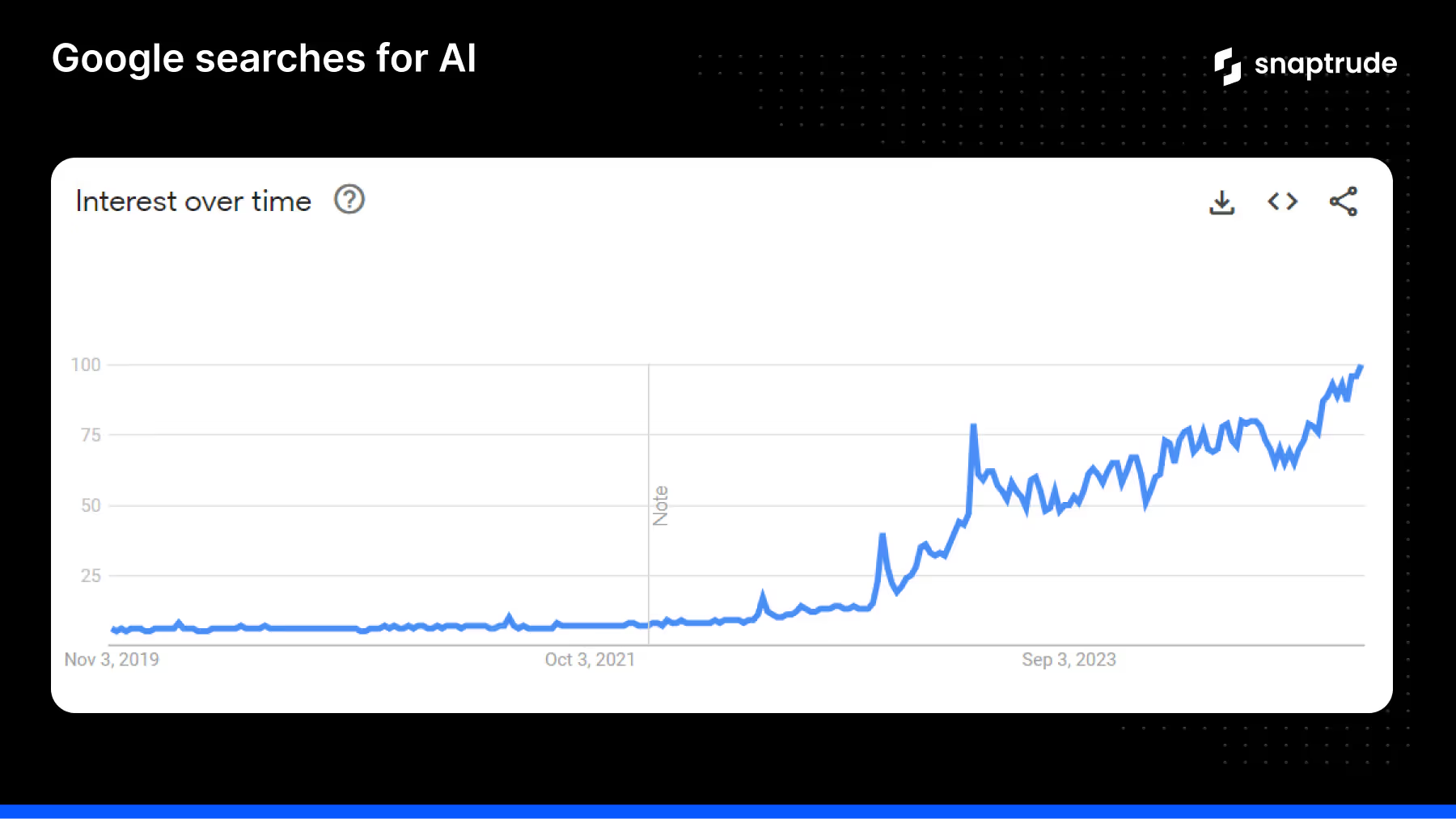

Just two years ago, AI was more science fiction than reality - something to joke about after making dystopian references to movies like I, Robot. But with OpenAI’s release of ChatGPT in 2022, interest in AI is at an all-time high as the technology rapidly reshapes industries from tech and law to healthcare and, yes, AEC.

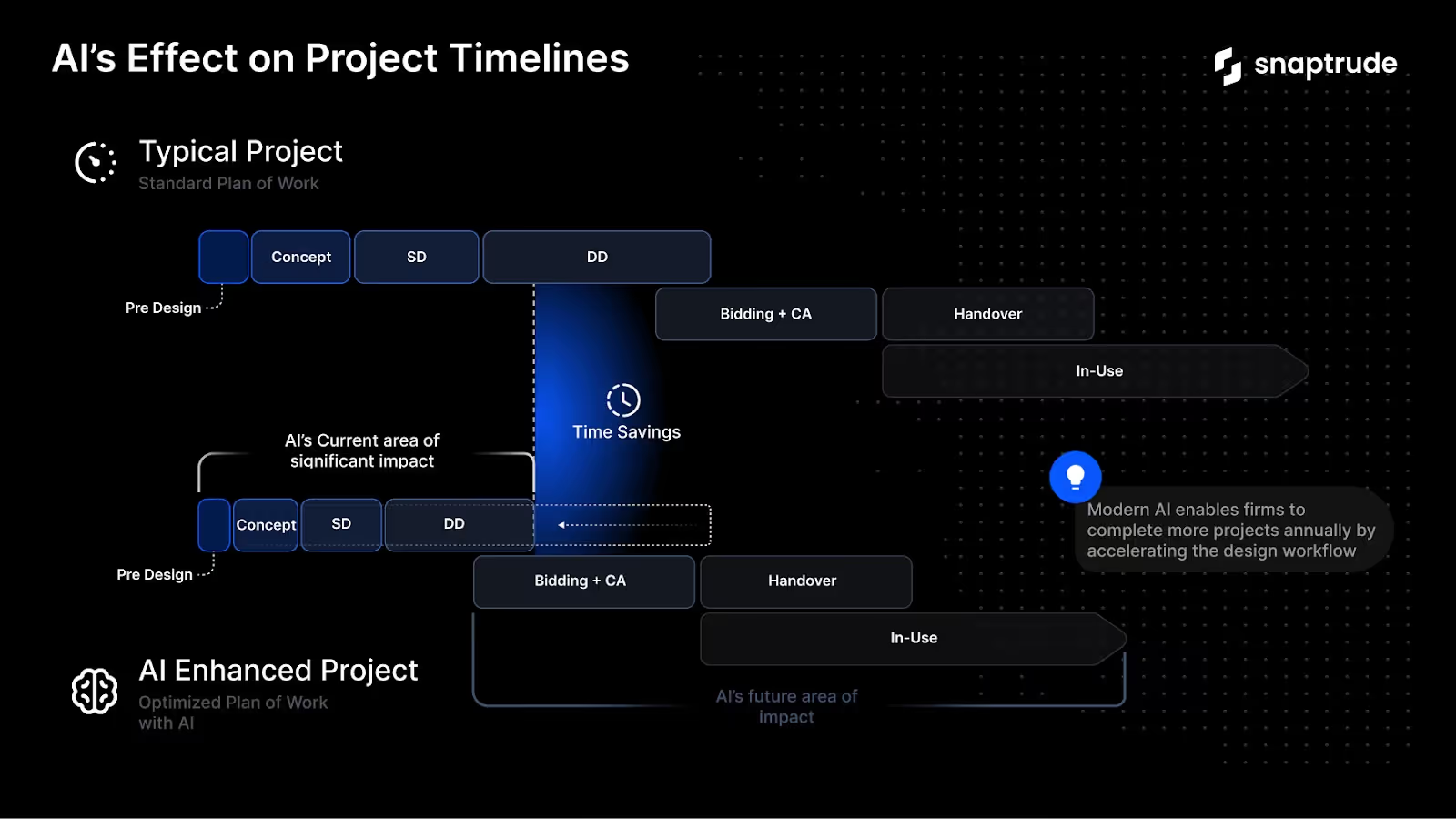

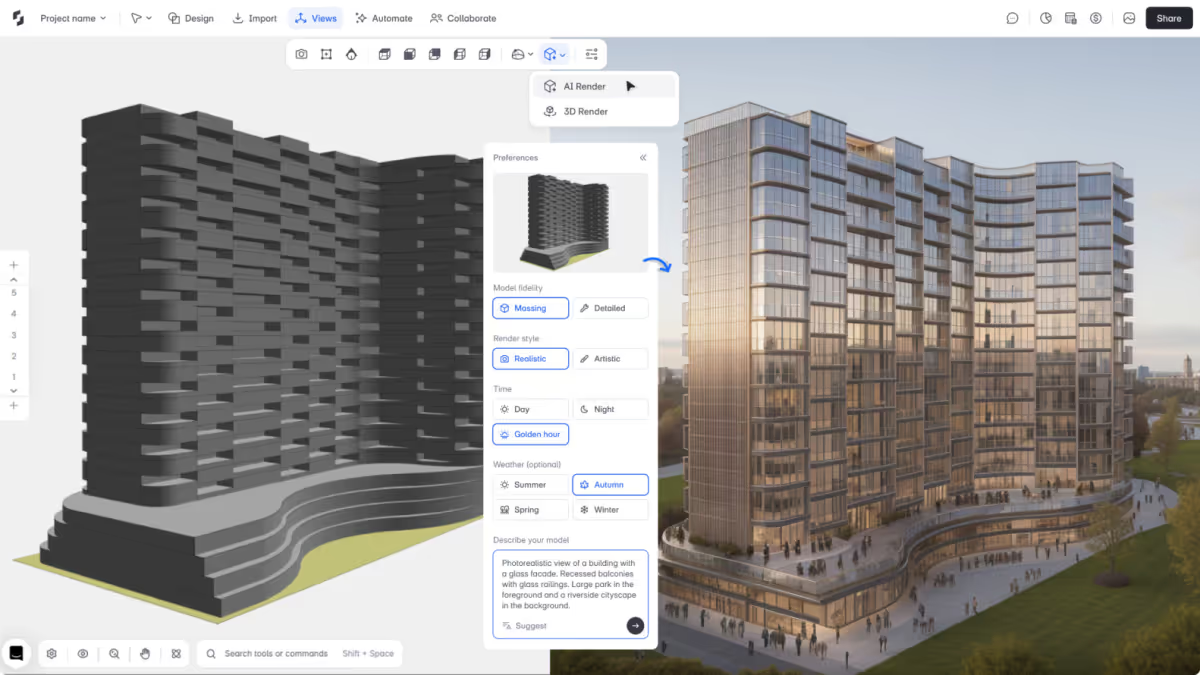

There’s an old saying: “When all you have is a hammer, everything looks like a nail.” With AI-driven design tools like Snaptrude, generative automation software like TestFit, and sustainability tools like Cove.tool, it’s clear that AI is impacting the AEC industry in more ways than one.

Maybe, just maybe, everything is a nail?

That’s for you to decide. But an array of superpowered AI tools raises the question: When do you raise a red flag? And when do you sprint to adopt a tool that could supercharge your workflows?

AI, Ownership, and Intellectual Property

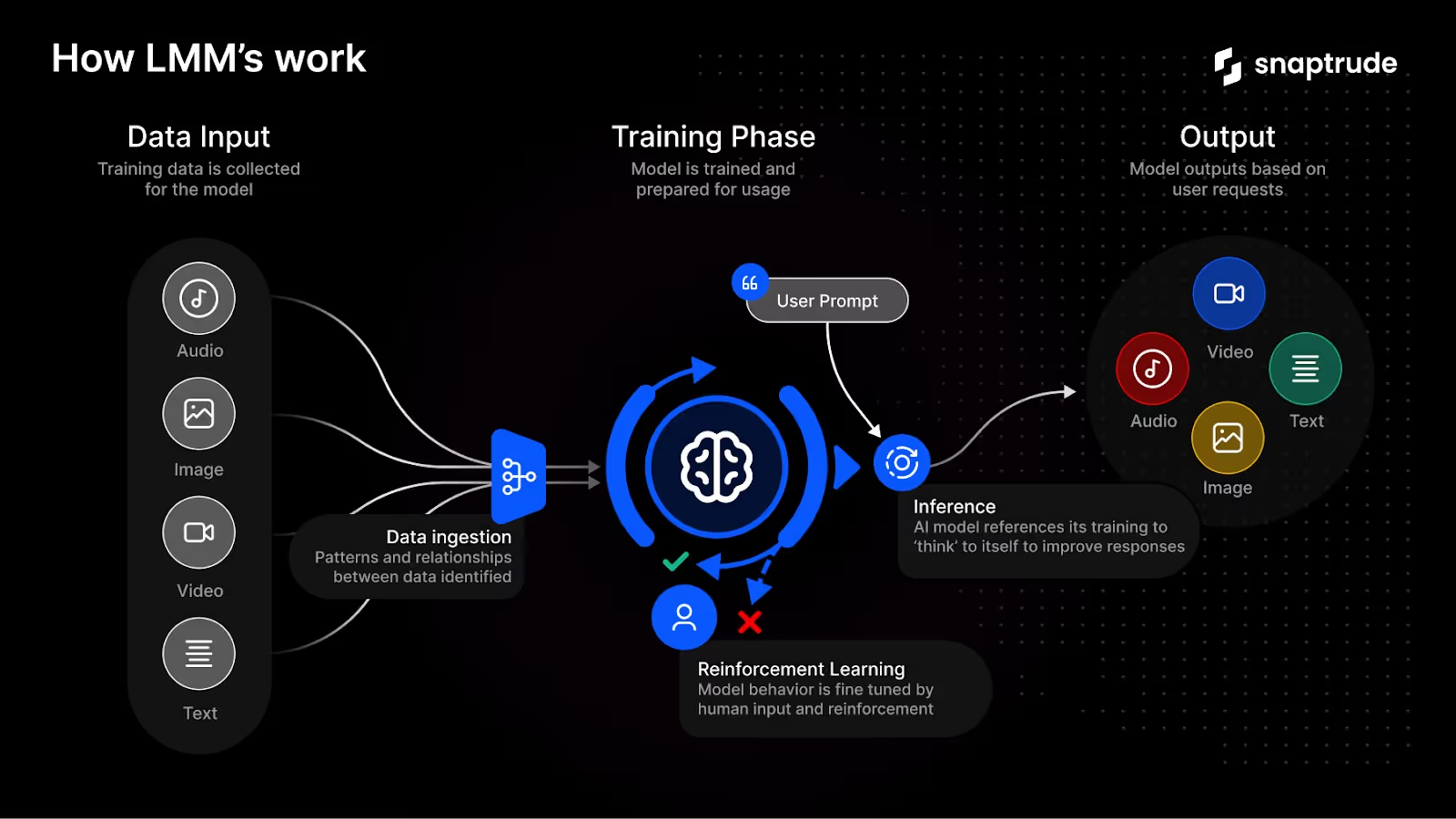

If you’re considering AI tools for your firm, understanding how these tools work is crucial. In a way, it’s similar to how children learn to talk: through repetition, confirmation, and parental guidance, they absorb information, then use it to speak and interact for themselves. AI tools don’t have parents to teach them. Instead, they rely on Large Multimodal Models (LMMs) trained on vast datasets that include images, text, videos, and audio. This data is what powers modern AI tools - without it, these models would be no more insightful than a 3-year-old giving advice on your project.

So, why does understanding these models matter? You’re just using the software, not building it, right? Here is where it gets a little more complex, and it all revolves around one word: data. Since these models train on existing data, questions about intellectual property (IP) and ownership are far more pressing than they were even a few years ago.

- Do I own the rights to my AI-generated content?

- If a tool uses data it doesn’t have permission to use, who’s liable?

- Which tools can I trust?

These are all valid concerns that deserve answers. Thankfully, beyond reviewing the Terms of Service (which is a great place to start), there are a few more ways to gain clarity.

Training Datasets

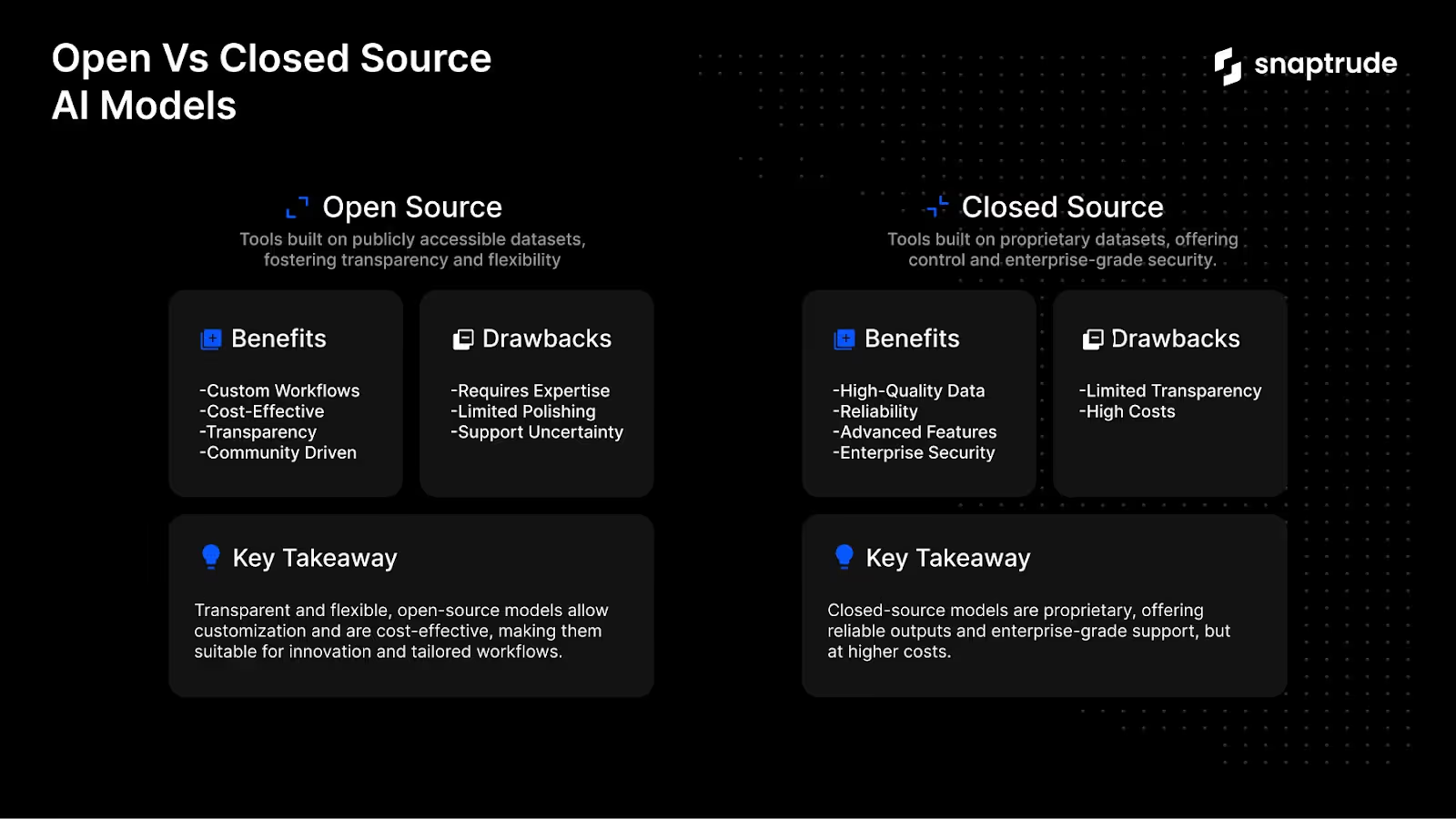

In early 2024, Adobe’s AI image generation tool, Firefly, came under scrutiny over misleading information about its training data sources. Because Firefly is a closed source model, Adobe wasn't required to share the training data, but the controversy highlights the importance of understanding training datasets and the distinction between open and closed source models.

While each has its benefits and drawbacks, the simplest takeaway is this: Open source models make the underlying data available but require experienced teams with the right know-how to make the most of it. Conversely, closed source models do not provide access to the underlying data but often offer more advanced, turnkey feature sets. For a more detailed breakdown, check out the chart below.

Transparency and Trust

Ultimately, protecting your firm without hindering innovation comes down to trust. AEC software companies must offer clear terms and transparent data practices that enable trust, unlock productivity, and eliminate legal ambiguity.

At Snaptrude, we understand that successful AI adoption requires transparency, data security, and IP ownership. That is why Snaptrude's built-in AI rendering uses open source data paired with AEC-specific workflows to ensure data security and full ownership of user-generated content. As the technology continues to develop, the team at Snaptrude is committed to partnering with firms across the world. So if you're a firm leader looking to help shape the future of AI in AEC, drop us a line. After all, we build for you.